A batteries-included workflow engine that doesn't get in your way.

What is Dagu?

Dagu is a batteries-included workflow engine that doesn't get in your way. Define workflows in simple YAML, execute them anywhere with a single binary, compose complex pipelines from reusable sub-workflows, and distribute tasks across workers, all without databases, message brokers, or code changes to your existing scripts.

Built for developers who want powerful workflow orchestration without the operational overhead.

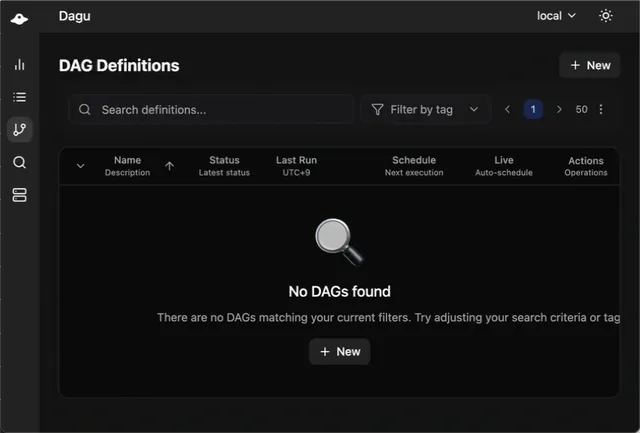

Web UI Preview

CLI Preview

Why Dagu?

🚀 Zero Dependencies

Single binary. No database, no message broker. Deploy anywhere in seconds, from your laptop to bare-metal servers to Kubernetes. Everything is stored in plain files (XDG compliant), making it transparent, portable, and easy to back up.
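"XDG compliant" refers to the XDG Base Directory specification: config lives under $XDG_CONFIG_HOME (default ~/.config) and data under $XDG_DATA_HOME (default ~/.local/share). The sketch below resolves those base directories the way the spec describes. The "dagu" subdirectory names are an assumption for illustration, so check Dagu's configuration docs for the exact layout it uses.

```python
import os
from pathlib import Path
from typing import Dict, Mapping, Optional

# Fallbacks defined by the XDG Base Directory specification (relative to $HOME).
XDG_DEFAULTS = {
    "XDG_CONFIG_HOME": ".config",
    "XDG_DATA_HOME": ".local/share",
}


def xdg_base(var: str, env: Optional[Mapping[str, str]] = None) -> Path:
    """Resolve one XDG base directory, falling back to the spec default."""
    env = os.environ if env is None else env
    value = env.get(var, "")
    # Per the spec, unset, empty, or relative values are ignored.
    if value and os.path.isabs(value):
        return Path(value)
    home = env.get("HOME") or str(Path.home())
    return Path(home) / XDG_DEFAULTS[var]


def dagu_dirs(env: Optional[Mapping[str, str]] = None) -> Dict[str, Path]:
    # Hypothetical layout: a "dagu" subdirectory under each base directory.
    return {
        "config": xdg_base("XDG_CONFIG_HOME", env) / "dagu",
        "data": xdg_base("XDG_DATA_HOME", env) / "dagu",
    }


if __name__ == "__main__":
    for kind, path in dagu_dirs().items():
        print(f"{kind}: {path}")
```

Under a layout like this, backing up means copying a couple of directories; there is no database dump involved.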

🧩 Composable Nested Workflows

Build complex pipelines from reusable building blocks. Define sub-workflows that can be called with parameters, executed in parallel, and fully monitored in the UI. See execution traces for every nested level. No black boxes.

🌐 Language Agnostic

Use your existing scripts without modification. No need to wrap everything in Python decorators or rewrite logic. Dagu orchestrates shell commands, Docker containers, SSH commands, and HTTP calls. Use whatever you already have.
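Conceptually, each step names an executor (a local shell command when none is given) and the engine hands the step to a matching runner, which is why existing scripts need no changes. The toy registry below illustrates that dispatch pattern only. The `register` helper, the step dictionary shape, and the type name "command" for the default runner are hypothetical, and this is not Dagu's actual code.

```python
import subprocess
from typing import Any, Callable, Dict

Step = Dict[str, Any]
Runner = Callable[[Step], str]

# Registry of executor type -> runner (hypothetical structure, for illustration only).
RUNNERS: Dict[str, Runner] = {}


def register(executor_type: str) -> Callable[[Runner], Runner]:
    """Decorator that records a runner for one executor type."""
    def wrap(fn: Runner) -> Runner:
        RUNNERS[executor_type] = fn
        return fn
    return wrap


@register("command")
def run_command(step: Step) -> str:
    # Run the step's command through the local shell and return its trimmed stdout.
    result = subprocess.run(step["command"], shell=True, check=True,
                            capture_output=True, text=True)
    return result.stdout.strip()


def dispatch(step: Step) -> str:
    # Steps with no executor block fall back to the local shell runner.
    executor = step.get("executor") or {"type": "command"}
    runner = RUNNERS.get(executor["type"])
    if runner is None:
        raise ValueError(f"no runner registered for executor type {executor['type']!r}")
    return runner(step)


if __name__ == "__main__":
    print(dispatch({"name": "local-script", "command": "echo hello from a script"}))
```

In this model, supporting Docker, SSH, or HTTP means registering another runner; the scripts being called stay exactly as they are.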

⚡ Distributed Execution

Built-in queue system with label-based task routing. Route tasks to workers based on labels (GPU, region, compliance requirements). Automatic service registry and health monitoring included. No external coordination service needed.

🎯 Production Ready

Not a toy. Battle-tested error handling with exponential-backoff retries, lifecycle hooks (on success, failure, and exit), real-time log streaming, email notifications, Prometheus metrics, and OpenTelemetry tracing out of the box.
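Exponential backoff means each retry waits longer than the one before. The sketch below computes a wait schedule and wraps a callable with retries. Its parameters mirror the `retryPolicy` fields used in the examples in this README (`limit`, `intervalSec`, `backoff: true`); the doubling multiplier, the reading of `limit` as "retries after the first attempt", and the optional `max_interval_sec` cap are assumptions, not confirmed Dagu behavior.

```python
import time
from typing import Callable, List, Optional, TypeVar

T = TypeVar("T")


def backoff_schedule(limit: int, interval_sec: float, multiplier: float = 2.0,
                     max_interval_sec: Optional[float] = None) -> List[float]:
    """Seconds to wait before each of `limit` retries; the first retry waits interval_sec."""
    waits = []
    for attempt in range(limit):
        wait = interval_sec * multiplier ** attempt
        if max_interval_sec is not None:
            wait = min(wait, max_interval_sec)  # optional cap (assumed, not a confirmed Dagu field)
        waits.append(float(wait))
    return waits


def run_with_retries(fn: Callable[[], T], limit: int, interval_sec: float,
                     multiplier: float = 2.0,
                     sleep: Callable[[float], None] = time.sleep) -> T:
    """Call fn once, then retry up to `limit` times, waiting longer after each failure."""
    for wait in backoff_schedule(limit, interval_sec, multiplier):
        try:
            return fn()
        except Exception:
            sleep(wait)
    return fn()  # last attempt: any exception now propagates to the caller


if __name__ == "__main__":
    # Mirrors `limit: 5, intervalSec: 2, backoff: true` under the doubling assumption.
    print(backoff_schedule(5, 2))  # [2.0, 4.0, 8.0, 16.0, 32.0]
```

Passing `sleep` as a parameter keeps the retry loop testable without real waiting.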

🎨 Modern Web UI

Beautiful UI that actually helps you debug. Live log tailing, DAG visualization with Gantt charts, execution history with full lineage, and drill-down into nested sub-workflows. Dark mode included.

Quick Start

1. Install dagu

macOS/Linux:

```bash
# Install to ~/.local/bin (default, no sudo required)
curl -L https://raw.githubusercontent.com/dagu-org/dagu/main/scripts/installer.sh | bash

# Install a specific version
curl -L https://raw.githubusercontent.com/dagu-org/dagu/main/scripts/installer.sh | bash -s -- --version v1.17.0

# Install to a custom directory
curl -L https://raw.githubusercontent.com/dagu-org/dagu/main/scripts/installer.sh | bash -s -- --install-dir /usr/local/bin
```

Windows (PowerShell):

```powershell
# Install latest version to default location (%LOCALAPPDATA%\Programs\dagu)
irm https://raw.githubusercontent.com/dagu-org/dagu/main/scripts/installer.ps1 | iex

# Install a specific version
& ([scriptblock]::Create((irm https://raw.githubusercontent.com/dagu-org/dagu/main/scripts/installer.ps1))) v1.24.0
```

Docker:

```bash
docker run --rm \
  -v ~/.dagu:/var/lib/dagu \
  -p 8080:8080 \
  ghcr.io/dagu-org/dagu:latest \
  dagu start-all
```

Homebrew:

```bash
brew update && brew install dagu
```

npm:

```bash
npm install -g --ignore-scripts=false @dagu-org/dagu
```

2. Create your first workflow

Note: When you first start Dagu with an empty DAGs directory, it automatically creates example workflows to help you get started. To skip this, set DAGU_SKIP_EXAMPLES=true.

```bash
cat > ./hello.yaml << 'EOF'
steps:
  - echo "Hello from Dagu!"
  - echo "Running step 2"
EOF
```

3. Run the workflow

```bash
dagu start hello.yaml
```

4. Start the Web UI

```bash
dagu start-all
```

Then visit http://localhost:8080

Key Features

Composable Nested Workflows

Break complex workflows into reusable, maintainable sub-workflows:

```yaml
description: |
  data-pipeline: Extract, transform, and load data daily with retries and parallelism.
schedule: "0 2 * * *" # Daily at 2 AM
type: graph

steps:
  # Extract raw data
  - name: extract
    command: python extract.py --date=${DATE}
    output: RAW_DATA

  # Transform in parallel for each data type
  - name: transform
    call: transform-data
    parallel:
      items: [customers, orders, products, inventory]
    params: "TYPE=${ITEM} INPUT=${RAW_DATA}"
    depends: extract

  # Load to warehouse with retry
  - name: load
    command: python load.py --batch=${RAW_DATA}
    depends: transform
    retryPolicy:
      limit: 3
      intervalSec: 10
      backoff: true

---
# Reusable sub-workflow
name: transform-data
params: [TYPE, INPUT]

steps:
  - name: validate
    command: python validate.py --type=${TYPE} --input=${INPUT}

  - name: transform
    command: python transform.py --type=${TYPE} --input=${INPUT}
    output: TRANSFORMED

  - name: quality-check
    command: python quality_check.py --data=${TRANSFORMED}
```

Every sub-workflow run is tracked and visible in the UI with full execution lineage.
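To make the graph semantics concrete, here is a toy model of the data-pipeline example: steps are grouped into batches that respect `depends`, and the `transform` step fans out into one sub-workflow call per entry in `parallel.items`, with `${ITEM}` and `${RAW_DATA}` substituted into `params`. It uses the standard library's `graphlib` (Python 3.9+) and `string.Template`; it illustrates the idea rather than how Dagu actually schedules runs, and the `RAW_DATA` value below is a made-up placeholder.

```python
from graphlib import TopologicalSorter
from string import Template
from typing import Dict, List

# Step name -> steps it depends on (mirrors `depends` in the data-pipeline YAML).
STEPS: Dict[str, List[str]] = {
    "extract": [],
    "transform": ["extract"],
    "load": ["transform"],
}


def execution_batches(steps: Dict[str, List[str]]) -> List[List[str]]:
    """Group steps into batches; every step in a batch has all its dependencies done."""
    sorter = TopologicalSorter(steps)
    sorter.prepare()  # raises graphlib.CycleError if the dependencies form a cycle
    batches = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        batches.append(ready)
        sorter.done(*ready)
    return batches


def fan_out(params: str, items: List[str], outputs: Dict[str, str]) -> List[str]:
    """Render one params string per parallel item, substituting ${ITEM} and step outputs."""
    template = Template(params)
    return [template.substitute(outputs, ITEM=item) for item in items]


if __name__ == "__main__":
    print(execution_batches(STEPS))  # [['extract'], ['transform'], ['load']]
    outputs = {"RAW_DATA": "/tmp/raw.json"}  # hypothetical value captured from `extract`
    for rendered in fan_out("TYPE=${ITEM} INPUT=${RAW_DATA}",
                            ["customers", "orders", "products", "inventory"], outputs):
        print(rendered)
```

Steps that share a batch have no ordering constraint between them, which is what lets a graph-type workflow run independent branches concurrently.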

Distributed Execution with Worker Labels

Route tasks to specialized workers without managing infrastructure:

```yaml
description: |
  ML pipeline that preprocesses data on CPU workers and trains models on GPU workers.

workerSelector:
  gpu: "true"
  cuda: "11.8"
  memory: "64G"

steps:
  - name: preprocess
    command: python preprocess.py
    workerSelector:
      cpu-only: "true" # Override for CPU task

  - name: train
    command: python train.py --gpu
    # Uses parent workerSelector (GPU worker)

  - name: evaluate
    call: model-evaluation
    params: "MODEL_PATH=/models/latest"
```

Start workers with labels:

```bash
# GPU worker
dagu worker --worker.labels gpu=true,cuda=11.8,memory=64G

# CPU worker
dagu worker --worker.labels cpu-only=true,region=us-east-1
```

Automatic service registry, health monitoring, and task redistribution included.
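The routing rule implied by this example is a subset match: a worker is eligible when it carries every key/value pair in the effective selector, and a step-level `workerSelector` takes the place of the DAG-level one. The sketch below encodes that reading, plus parsing of the comma-separated `key=value` string passed to `--worker.labels`. It illustrates the matching idea, not Dagu's scheduler; in particular, "replace rather than merge" is an assumption inferred from the "Override" comment above.

```python
from typing import Dict, Mapping, Optional


def parse_labels(spec: str) -> Dict[str, str]:
    """Parse a comma-separated 'key=value' list such as 'gpu=true,cuda=11.8'."""
    labels: Dict[str, str] = {}
    for pair in (p.strip() for p in spec.split(",")):
        if not pair:
            continue  # tolerate stray commas
        key, sep, value = pair.partition("=")
        if not sep:
            raise ValueError(f"label {pair!r} is not in key=value form")
        labels[key.strip()] = value.strip()
    return labels


def effective_selector(dag_selector: Mapping[str, str],
                       step_selector: Optional[Mapping[str, str]]) -> Mapping[str, str]:
    # Assumption: a step-level selector replaces the DAG-level one instead of merging.
    return step_selector if step_selector else dag_selector


def matches(selector: Mapping[str, str], worker_labels: Mapping[str, str]) -> bool:
    """A worker is eligible when it carries every selector key with the same value."""
    return all(worker_labels.get(key) == value for key, value in selector.items())


if __name__ == "__main__":
    workers = {
        "gpu-worker": parse_labels("gpu=true,cuda=11.8,memory=64G"),
        "cpu-worker": parse_labels("cpu-only=true,region=us-east-1"),
    }
    dag_selector = {"gpu": "true", "cuda": "11.8", "memory": "64G"}
    step_selectors = {"preprocess": {"cpu-only": "true"}, "train": None}
    for step, own in step_selectors.items():
        selector = effective_selector(dag_selector, own)
        eligible = [name for name, labels in workers.items() if matches(selector, labels)]
        print(step, eligible)  # preprocess ['cpu-worker'], then train ['gpu-worker']
```

Extra labels on a worker (such as `region` above) never prevent a match; only missing or different values do.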

Advanced Error Handling

Production-grade retry policies and lifecycle hooks:

```yaml
steps:
  - name: api-call
    executor:
      type: http
      config:
        method: POST
        url: https://api.example.com/process
        timeout: 30s
    retryPolicy:
      limit: 5
      intervalSec: 2
      backoff: true # Exponential backoff
    continueOn:
      failure: true # Keep going even if this fails

handlerOn:
  success:
    command: slack-notify.sh "✅ Pipeline succeeded"
  failure:
    executor:
      type: mail
      config:
        to: oncall@company.com
        from: alerts@company.com
        subject: "🚨 ALERT: Pipeline Failure - ${DAG_NAME}"
        message: |
          Pipeline failed.
          Run ID: ${DAG_RUN_ID}
          Check logs: ${DAG_RUN_LOG_FILE}
  exit:
    command: cleanup-temp-files.sh # Always runs
```

Built-in Executors

Run each step with the executor that fits the task:

```yaml
steps:
  # Shell command
  - name: local-script
    command: ./deploy.sh

  # Docker container
  - name: data-processing
    executor:
      type: docker
      config:
        image: python:3.11
        autoRemove: true
    command: python process.py

  # Remote SSH
  - name: remote-deploy
    executor:
      type: ssh
      config:
        user: ubuntu
        host: prod-server.internal
    command: sudo systemctl restart app

  # HTTP API call
  - name: trigger-webhook
    executor:
      type: http
      config:
        method: POST
        url: https://hooks.slack.com/services/xxx

  # JSON processing
  - name: parse-config
    executor:
      type: jq
      config:
        query: '.environments[] | select(.name=="prod")'
    command: cat config.json
```

Use Cases

🔄 Data Pipelines

Extract, transform, and load data with complex dependencies. Use nested workflows for reusable transformations and parallel processing.

🤖 ML Workflows

Train models on GPU workers, preprocess on CPU workers, and orchestrate the entire lifecycle with automatic retries and versioning.

🚀 Deployment Automation

Multi-environment deployments (dev → staging → prod) with approval gates, rollback support, and notification integrations.

📊 ETL/ELT

Replace brittle cron jobs with visible, maintainable workflows. Visualize data lineage and debug failures with live logs.

🔧 Legacy System Migration

Wrap existing shell scripts and Perl code in Dagu workflows without rewriting them. Add retry logic, monitoring, and scheduling incrementally.

☁️ Multi-Cloud Orchestration

Route tasks to workers in different cloud regions based on compliance requirements, data locality, or cost optimization.